Proxies help preserve anonymity, avoid rate limits, and bypass geo-restrictions in Python-based applications, especially for web scraping and automation. This article explains what a Python proxy is and covers the essentials of using proxies in Python: how to configure them, which proxy libraries to use, and how to manage proxies effectively for various online tasks.

What Is a Python Proxy?

A proxy acts as an intermediary between your Python script and the target server, routing your requests through a different IP address. This helps mask your identity, enhance privacy, avoid IP bans, and distribute traffic across multiple endpoints, making it particularly useful in web scraping, data harvesting, and privacy protection.

Proxy Pattern Implemented in Python:

In software design, a proxy pattern involves creating a new class (the proxy) that mimics the interface of another class or resource, but adds some form of control or management functionality. This could be used for lazy loading, logging, access control, or other purposes. Python’s dynamic typing and rich class support make it a good language for implementing proxy patterns.
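As a minimal sketch of the pattern described above, the class below stands in for another object, creates it lazily on first use, and logs each method call. The names `ReportService` and `LazyLoggingProxy` are hypothetical and exist only for illustration.

```python
class ReportService:
    """The 'real' object: pretend it is expensive to construct."""

    def __init__(self):
        self.loaded = True

    def fetch(self, report_id):
        return f"report-{report_id}"


class LazyLoggingProxy:
    """Stands in for another object, creating it on first use and logging calls."""

    def __init__(self, factory):
        self._factory = factory   # callable that builds the real object
        self._target = None       # not created until it is needed
        self.calls = []           # names of the methods that were invoked

    def __getattr__(self, name):
        # Python calls this only for attributes not found on the proxy itself.
        if self._target is None:
            self._target = self._factory()
        attr = getattr(self._target, name)
        if not callable(attr):
            return attr

        def wrapper(*args, **kwargs):
            self.calls.append(name)
            return attr(*args, **kwargs)

        return wrapper


service = LazyLoggingProxy(ReportService)
result = service.fetch(42)
```

Because the proxy forwards attribute access through `__getattr__`, callers use it exactly like the wrapped object, while the construction cost is deferred and every call is recorded in `service.calls`.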

Why Use a Proxy in Python?

Using a proxy in Python can significantly enhance both security and functionality when making network requests. Proxies act as intermediaries between a client and a server, allowing the client to route its requests through the proxy’s IP address instead of its own. This practice helps mask the client’s identity, which is essential for privacy and avoiding IP bans when scraping websites or accessing restricted content. Additionally, proxies can bypass geo-restrictions and improve request performance by load balancing. In Python, proxies are easily integrated into libraries like requests, making them a versatile tool for developers managing network interactions.

Here are some reasons to use Python proxies:

- Bypassing Restrictions: Python proxies let you circumvent access restrictions imposed by firewalls, filters, or location-based blocks. Using proxies from different locations or networks allows you to access content that may not be available in your area or network.

- Load Distribution and Scalability: Python proxies let you distribute your requests across multiple servers. This can help you handle more requests at once and make your program more scalable.

- Anonymity and Privacy: Proxies allow you to conceal your IP address, providing additional privacy and security. By sending your requests through various proxy servers, you can prevent websites from discovering and tracking your actual IP address.

- IP Blocking Mitigation: If you scrape a website or send many requests, you could be blocked if your behavior appears suspicious or exceeds a certain limit. Python proxy servers help mitigate this risk by allowing you to switch among various IP addresses. This disperses your requests and reduces the likelihood of being blocked based on your IP address.

- Geographic Targeting: With Python proxies, you can make your requests appear as if they’re coming from different locations. This can be helpful when testing features that depend on your location or when obtaining regional information from websites.

- Performance Optimization: Caching proxies can improve performance by serving stored responses instead of forwarding repeated requests to the target server. This reduces bandwidth use and speeds up response times, especially for frequently used services.

- Testing and Development: Python proxies let you capture and inspect network traffic, making them useful tools for testing and debugging. The captured requests and responses show exactly how your Python script communicates with the target server.

- Versatility and Flexibility: Python Requests and proxies can be combined to perform a wide range of web-related tasks. Whether you’re pulling data, managing processes, or using APIs, this combination allows you to customize your requests to meet your needs.

Python Proxies: Innovative Approach to Web Scraping

How to Set Up a Proxy in Python

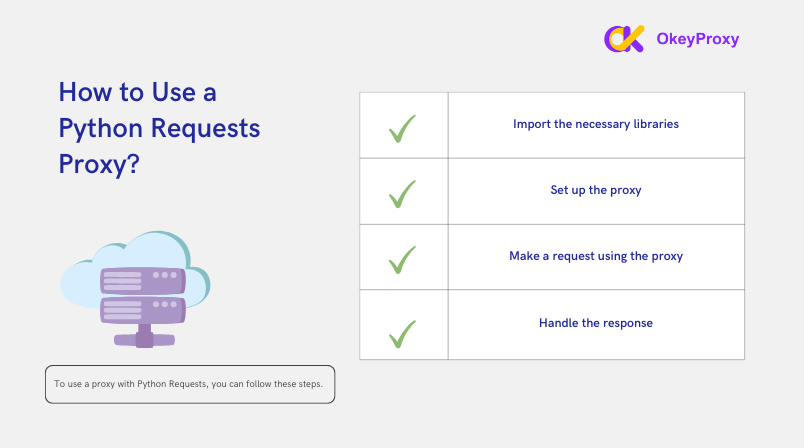

Setting up a proxy in Python is straightforward. Below are the basic steps to integrate a proxy in your web scraping or automation script:

- Install Required Libraries: Use popular libraries such as requests or httpx to configure proxies.

- Choose a Proxy Type: Decide whether you want to use HTTP, HTTPS, SOCKS5, or residential proxies depending on your requirements.

- Configure the Proxy: Set the proxy URL in the request to route traffic through the proxy server.

- Handle Errors: Implement error handling to catch proxy connection failures, timeouts, or blocked requests.
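The "choose a type" and "configure" steps above can be sketched as a small helper that validates the proxy scheme and returns a mapping in the shape that requests accepts for its `proxies` argument. The names `build_proxies` and `SUPPORTED_SCHEMES` are hypothetical, and the sketch assumes that SOCKS schemes are only usable with requests when its optional SOCKS extra (`requests[socks]`) is installed.

```python
# Hypothetical helper: the scheme list is an assumption for illustration.
SUPPORTED_SCHEMES = {"http", "https", "socks5", "socks5h"}


def build_proxies(scheme, host, port):
    """Return a proxies mapping in the shape that requests expects."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"Unsupported proxy type: {scheme!r}")
    if not 0 < int(port) < 65536:
        raise ValueError(f"Port out of range: {port}")
    proxy_url = f"{scheme}://{host}:{port}"
    # The same proxy handles both plain-HTTP and HTTPS targets.
    return {"http": proxy_url, "https": proxy_url}
```

The result can be passed straight to a request, for example `requests.get(url, proxies=build_proxies("http", "proxy.example.com", 8080), timeout=10)`. Validating early means a typo in the proxy type fails before any traffic is sent.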

Setting Proxy in Requests Python

Before setting up a proxy with Python requests, confirm that you have the necessary permissions and legal rights to use the proxy you configure.

The requests library is a popular Python package for sending various HTTP requests. You can install it with pip, the Python package installer. Pip is usually installed automatically when you install Python, but you can install it separately when you need it.

- Open a command prompt

A. Windows: Search for “CMD” or “Command Prompt” in the Start menu.

B. macOS: Open Terminal from Applications > Utilities.

C. Linux: Open Terminal from the Applications menu.

- Check if Python is installed

Before installing the library, check whether Python is already installed, for example by running `python --version` (`python3 --version` on some systems).

- Check if pip is installed

Most modern Python installations come with pip preinstalled; running `pip --version` confirms it.

- Install the requests library

Run `pip install requests`. Once the installation succeeds, you are ready to make HTTP requests in Python.

Example of using Python requests proxy

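```python
import requests

# Route both HTTP and HTTPS traffic through an authenticated proxy.
# Most proxies are reached over plain HTTP even for HTTPS targets; use an
# https:// proxy URL only if the proxy itself accepts TLS connections.
proxies = {
    'http': 'http://user:password@proxy.example.com:8080',
    'https': 'http://user:password@proxy.example.com:8080',
}

response = requests.get('https://example.com', proxies=proxies, timeout=10)
print(response.content)
```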

Note: While the requests library provides a straightforward way to use a Python proxy, more complex applications may call for a dedicated framework such as Scrapy. Scrapy is a Python framework for large-scale web scraping that provides the tools needed to extract data from websites, process it, and store it in your preferred format. It can also be used with rotating proxy services such as OkeyProxy.

Advanced Python Proxy Libraries

Beyond the basic requests library, several Python libraries offer advanced proxy management features. Here’s a look at some innovative solutions:

- httpx: A modern HTTP client with both synchronous and asynchronous APIs. It supports proxies and concurrent requests, so proxy rotation can be layered on top for faster scraping.

- Selenium: Widely used for web automation, Selenium can be configured with proxies to manage headless browser sessions effectively.

- PySocks: A lightweight SOCKS proxy wrapper for Python’s socket module, perfect for handling SOCKS5 proxies.

Example of using Python httpx proxy

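```python
import asyncio

import httpx

# Using httpx with a proxy. Recent httpx releases take a single `proxy`
# argument; older releases used a `proxies` mapping instead.
PROXY_URL = 'http://proxy.example.com:8080'


async def main():
    # `async with` and `await` are only valid inside a coroutine
    async with httpx.AsyncClient(proxy=PROXY_URL) as client:
        response = await client.get('https://example.com')
        print(response.text)


asyncio.run(main())
```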

Management of Python Proxy for Scale

Rotating Proxies in Python

When extensive web scraping is required, rotating proxies become necessary to prevent any single proxy IP from being blocked. Python makes this straightforward.

Developers can create a list of Python proxies and select a different one for each request:

```python
import random

import requests

proxy_list = ["http://proxy1.com:3128", "http://proxy2.com:8080", "http://proxy3.com:1080"]
url = "http://example.org"

for i in range(3):
    # Pick a random proxy for each request
    proxy = {"http": random.choice(proxy_list)}
    response = requests.get(url, proxies=proxy, timeout=10)
    print(response.status_code)
```

Also, with a pool of Python proxies, scripts can switch IP addresses after every request or at set intervals:

```python
from itertools import cycle

import requests

# List of proxies
proxy_pool = cycle([
    'http://proxy1.example.com:8080',
    'http://proxy2.example.com:8080',
    'http://proxy3.example.com:8080',
])

# Rotate through the proxies, one per request
for i in range(10):
    proxy = next(proxy_pool)
    response = requests.get('https://example.com', proxies={"http": proxy, "https": proxy}, timeout=10)
    print(response.status_code)
```
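Switching at set intervals, rather than on every request, can be sketched with a small wrapper around `itertools.cycle`. The class name `IntervalRotator` and its `every` parameter are hypothetical. The sketch counts requests rather than elapsed time, which is only one possible reading of "interval".

```python
from itertools import cycle


class IntervalRotator:
    """Hands out the same proxy for `every` requests, then moves to the next."""

    def __init__(self, proxy_urls, every=3):
        proxy_urls = list(proxy_urls)
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        if every < 1:
            raise ValueError("every must be at least 1")
        self._pool = cycle(proxy_urls)
        self._every = every
        self._used = 0
        self._current = next(self._pool)

    def next_proxies(self):
        # Advance to the next proxy once the current one has served its quota.
        if self._used == self._every:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return {"http": self._current, "https": self._current}
```

Each call to `next_proxies()` returns a mapping that can be passed as the `proxies` argument of `requests.get`, so the request loop itself does not need to know when a switch happens.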

Proxy Authentication with Python

Some proxies require authentication. Python can handle proxies that need usernames and passwords, ensuring that requests are routed securely through private proxy networks.

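```python
import requests

# Credentials are embedded in the proxy URL as user:password@host:port
proxies = {
    'http': 'http://user:password@proxy.example.com:8080',
    'https': 'http://user:password@proxy.example.com:8080',
}

response = requests.get('https://example.com', proxies=proxies, timeout=10)
```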
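Usernames or passwords that contain characters such as `@`, `:` or `/` need to be percent-encoded before they are embedded in a proxy URL, otherwise the URL is parsed incorrectly. The sketch below builds the URL with the standard library. The function names and the `PROXY_USER` / `PROXY_PASS` environment variables are hypothetical choices for illustration, not a convention that requests itself defines.

```python
import os
from urllib.parse import quote


def authenticated_proxy_url(host, port, username, password, scheme="http"):
    """Build a proxy URL with percent-encoded credentials."""
    # safe="" also encodes "/", so characters such as "@", ":" and "/" in a
    # password cannot be mistaken for URL delimiters.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"{scheme}://{user}:{secret}@{host}:{port}"


def proxies_from_env(host, port):
    """Read credentials from the (hypothetical) PROXY_USER / PROXY_PASS variables."""
    url = authenticated_proxy_url(
        host, port, os.environ["PROXY_USER"], os.environ["PROXY_PASS"]
    )
    return {"http": url, "https": url}
```

Reading credentials from the environment keeps them out of the source code, which matters once scripts are shared or committed to version control.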

Python Proxy Failover and Error Handling

Not all proxies are reliable. Implementing error handling and failover mechanisms ensures that your Python script continues to run even when a proxy fails. Use retry mechanisms to avoid disruptions.

```python
import requests
from requests.exceptions import ProxyError, Timeout

# Basic proxy failover logic
proxies = ['http://proxy1.example.com:8080', 'http://proxy2.example.com:8080']

for proxy in proxies:
    try:
        # Set both keys so the proxy is also used for the HTTPS target
        response = requests.get(
            'https://example.com',
            proxies={'http': proxy, 'https': proxy},
            timeout=10,
        )
        if response.status_code == 200:
            print('Success with', proxy)
            break
    except (ProxyError, Timeout):
        print(f'Proxy {proxy} failed. Trying next...')
```
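The retry mechanism mentioned above can be combined with failover in one helper. This is a sketch under assumptions: `fetch_with_failover` and its parameters are hypothetical names, and the actual request is delegated to a `send(url, proxies)` callable supplied by the caller, so the helper does not depend on any particular HTTP library.

```python
import time


def fetch_with_failover(url, proxy_urls, send, retries_per_proxy=2, backoff=0.5):
    """Try each proxy in order, retrying a few times before moving on.

    `send(url, proxies)` is any callable that performs the request and
    raises an exception on failure.
    """
    last_error = None
    for proxy_url in proxy_urls:
        proxies = {"http": proxy_url, "https": proxy_url}
        for attempt in range(retries_per_proxy):
            try:
                return send(url, proxies)
            except Exception as exc:  # narrow this to your client's error types
                last_error = exc
                # Wait a little longer after each failed attempt.
                time.sleep(backoff * (2 ** attempt))
    raise RuntimeError(f"All proxies failed for {url}") from last_error
```

With requests, `send` could be `lambda url, proxies: requests.get(url, proxies=proxies, timeout=10)`. Keeping the transport injectable also makes the failover logic easy to exercise with a fake `send` in tests.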

Powerful Python Proxy for Reliability

With support for HTTP(S) and SOCKS protocols, a reliable Python proxy is an essential tool for running web scraping or monitoring scripts. OkeyProxy provides 150 million+ real and compliant residential IPs for rotating proxies, which removes the concern of a single Python proxy IP failing and minimizes the risk of your real IP being blocked.

Future Trends and Advanced Strategies for Python Proxy

AI-Enhanced Python Proxy Management

Incorporating machine learning and AI into proxy management can optimize proxy selection and rotation by analyzing response times, success rates, and failure patterns. Python libraries such as scikit-learn can be integrated to make smarter proxy decisions.
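Before reaching for a trained model, the same signals (success rates and response times) can drive a simple scoring heuristic. The sketch below is that simpler stand-in, not a machine learning approach. `ProxyScoreboard` and its scoring formula are hypothetical, and the recorded statistics could later serve as features for a library such as scikit-learn.

```python
from collections import defaultdict


class ProxyScoreboard:
    """Tracks per-proxy outcomes and picks the proxy with the best score."""

    def __init__(self, proxy_urls):
        self._proxies = list(proxy_urls)
        self._stats = defaultdict(lambda: {"ok": 0, "fail": 0, "seconds": 0.0})

    def record(self, proxy_url, success, elapsed):
        """Store the outcome of one request made through `proxy_url`."""
        stats = self._stats[proxy_url]
        stats["ok" if success else "fail"] += 1
        if success:
            stats["seconds"] += elapsed

    def score(self, proxy_url):
        stats = self._stats[proxy_url]
        # Smoothed success rate, so a proxy with no history starts at 0.5.
        success_rate = (stats["ok"] + 1) / (stats["ok"] + stats["fail"] + 2)
        # Assume one second for proxies that have no successful request yet.
        avg_seconds = stats["seconds"] / stats["ok"] if stats["ok"] else 1.0
        # Higher success rate and lower latency both raise the score.
        return success_rate / (1.0 + avg_seconds)

    def best(self):
        return max(self._proxies, key=self.score)
```

A scraper would call `record()` after every request and `best()` before the next one. The smoothing keeps a proxy from being ruled out permanently after a single early failure.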

Combining Python Proxies with CAPTCHA Solvers

As websites increasingly use CAPTCHAs to block bots, combining proxies with CAPTCHA-solving services can increase the success rate of web scraping operations. Integrating CAPTCHA solvers such as 2Captcha or Anti-Captcha with Python Requests can help your script get past these challenges.

Conclusion

Proxies are an essential component in Python programming, offering a range of benefits from maintaining anonymity to facilitating efficient web scraping and load balancing. Developers can create more robust, flexible, and secure applications by understanding how to implement and utilize proxies like OkeyProxy in Python. When used responsibly and ethically, the power of proxies can significantly enhance Python applications, opening up new possibilities in the world of network communication.