Data is the cornerstone of competitive analysis, market research, and business strategy. One of the most valuable sources of data for e-commerce businesses is Amazon, the world’s largest online marketplace. Scraping a seller’s products on Amazon can provide insights into pricing strategies, product offerings, and customer reviews, which are crucial for making informed business decisions.

This article delve into the process of scraping a seller’s products on Amazon, covering essential tools, techniques, and best practices while addressing legal and ethical considerations.

Amazon’s Data Structure Include?

Amazon’s website is structured in a way that categorizes products, reviews, pricing, and other details. To scrape product data effectively, it is crucial to understand the following components:

- Product Listings: Contains details such as product name, description, price, and images.

- Seller Information: Includes seller ratings, number of products, and seller name.

- Reviews and Ratings: Provides customer feedback and product ratings.

- Product Categories: Helps in filtering and organizing products.

Scrape a Seller’s Products on Amazon Step by Step

Scraping a seller’s products on Amazon requires a detailed and structured approach, particularly due to Amazon’s sophisticated anti-scraping measures. Below is a comprehensive tutorial, covering various aspects of the process, from setting up the environment to dealing with challenges like CAPTCHAs and dynamic content.

1. Preparation of Web Scraping

Before diving into the scraping process, ensure that your environment is set up with the necessary tools and libraries.

a. Tools and Libraries

- Python: Preferred for its rich ecosystem of libraries.

- Libraries:

requests: For making HTTP requests.BeautifulSoup: For parsing HTML content.Selenium: For handling dynamic content and interactions.Pandas: For data manipulation and storage.Scrapy: If you prefer a more scalable, spider-based scraping approach.

- Proxy Management:

requests-ip-rotator: A library for rotating IP addresses.- Proxy services like

OkeyProxyfor rotating proxies.

- CAPTCHA Solvers:

- Services like

2CaptchaorAnti-Captchafor solving CAPTCHAs.

- Services like

b. Environment Setup

- Install Python (if not already installed).

- Set up a virtual environment:

python3 -m venv amazon-scraper source amazon-scraper/bin/activate - Install necessary libraries:

pip install requests beautifulsoup4 selenium pandas scrapy

2. Understanding Amazon’s Anti-Scraping Mechanisms

Amazon employs various techniques to prevent automated scraping, which are challenges for data collections:

- Rate Limiting: Amazon limits the number of requests you can make in a short period.

- IP Blocking: Frequent requests from a single IP can lead to temporary or permanent bans.

- CAPTCHAs: These are presented to verify if the user is human.

- JavaScript-Based Content: Some content is dynamically loaded using JavaScript, which requires special handling.

3. Locating the Seller’s Products

a. Identify Seller ID

To scrape a specific seller’s products, you first need to identify the seller’s unique ID or their storefront URL. The URL usually follows this format:

https://www.amazon.com/s?me=SELLER_IDYou can find this URL by visiting the seller’s storefront on Amazon.

b. Fetch Product Listings

With the seller’s ID or URL, you can begin fetching the product listings. Since Amazon’s pages are often paginated, you’ll need to handle pagination to ensure that all products are scraped.

import requests

from bs4 import BeautifulSoup

seller_url = "https://www.amazon.com/s?me=SELLER_ID"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"

}

def get_products(seller_url):

products = []

while seller_url:

response = requests.get(seller_url, headers=headers)

soup = BeautifulSoup(response.content, "html.parser")

# Extract product details

for product in soup.select(".s-title-instructions-style"):

title = product.get_text(strip=True)

products.append(title)

# Find the next page URL

next_page = soup.select_one("li.a-last a")

seller_url = f"https://www.amazon.com{next_page['href']}" if next_page else None

return products

products = get_products(seller_url)

print(products)4. Handling Pagination

Amazon product pages are often paginated, requiring a loop to go through each page. The logic for this is included in the get_products function above, where it checks for the presence of a “Next” button and extracts the URL for the subsequent page.

5. Handling Dynamic Content

Some product details, like price or availability, may be loaded dynamically using JavaScript. In such cases, you’ll need to use Selenium or a headless browser like Playwright to render the page before scraping.

Using Selenium for Dynamic Content

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

from bs4 import BeautifulSoup

# Setup Chrome options

chrome_options = Options()

chrome_options.add_argument("--headless") # Run in headless mode

chrome_options.add_argument("--no-sandbox")

chrome_options.add_argument("--disable-dev-shm-usage")

# Start Chrome driver

service = Service('/path/to/chromedriver')

driver = webdriver.Chrome(service=service, options=chrome_options)

# Open the seller's page

driver.get("https://www.amazon.com/s?me=SELLER_ID")

# Wait for the page to load completely

driver.implicitly_wait(5)

# Parse the page source with BeautifulSoup

soup = BeautifulSoup(driver.page_source, "html.parser")

# Extract product details

for product in soup.select(".s-title-instructions-style"):

title = product.get_text(strip=True)

print(title)

driver.quit()6. Dealing with CAPTCHAs

Amazon may present CAPTCHAs to block scraping attempts. If you encounter a CAPTCHA, you’ll need to either solve it manually or use a service like 2Captcha to automate the process.

Example of Using 2Captcha

import requests

captcha_solution = solve_captcha("captcha_image_url") # Use a CAPTCHA-solving service like 2Captcha

# Submit the solution with your request

data = {

'field-keywords': 'your_search_term',

'captcha': captcha_solution

}

response = requests.post("https://www.amazon.com/s", data=data, headers=headers)7. Proxy Management

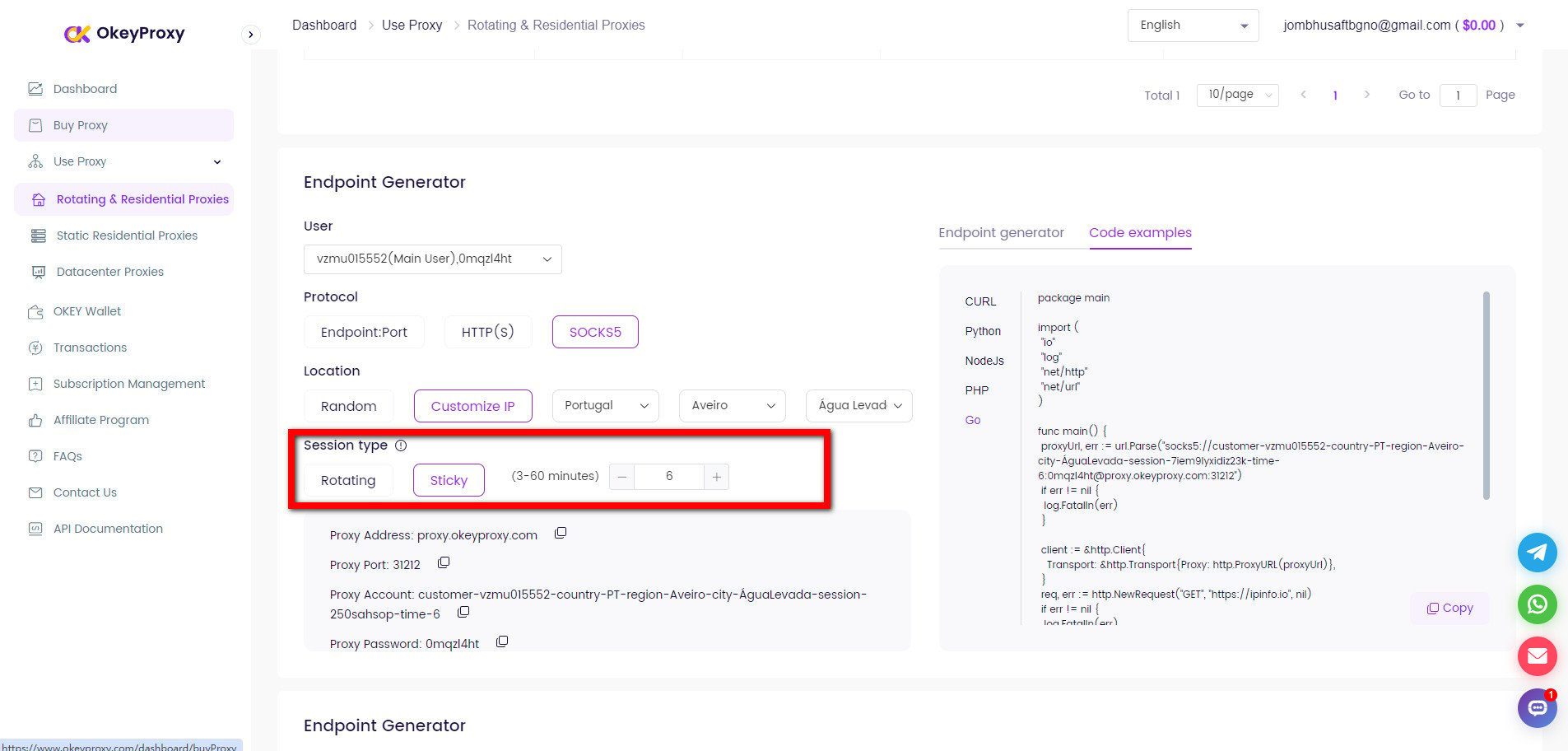

Scraping a seller’s products on Amazon is a common use case for proxies, especially for businesses engaged in price intelligence, competitor monitoring, or product research. Since Amazon employs strong anti-bot measures, proxies for data scraping are essential to bypass detection from Amazon.

To avoid IP blocking, it’s crucial to use rotating proxies. This can be achieved using a proxy management tool or service.

Setting Up Proxies with Requests

proxies = {

"http": "http://username:password@proxy_server:port",

"https": "https://username:password@proxy_server:port",

}

response = requests.get(seller_url, headers=headers, proxies=proxies)Rotate IP Address with OkeyProxy

OkeyProxy is a ideal proxy provider supported by patented technology, which provides 150 million+ real and compliant rotating residential IPs, quickly connecting to target websites in any country/region and easily bypassing blocking and bans of IP.

8. Data Storage

Once you’ve successfully scraped the data, store it in a structured format. Pandas is an excellent tool for this.

Saving to CSV with Pandas

import pandas as pd

# Assuming products is a list of dictionaries

df = pd.DataFrame(products)

df.to_csv("amazon_products.csv", index=False)9. Best Practices and Challenges

- Respect robots.txt: Always adhere to the guidelines specified in Amazon’s

robots.txtfile. - Rate Limiting: Implement rate-limiting strategies to prevent overloading Amazon’s servers.

- Error Handling: Be prepared to handle various errors, including request timeouts, CAPTCHAs, and page not found errors.

- Testing: Test your scraper thoroughly in a controlled environment before running it at scale.

- Legality: Ensure that your scraping activities are in compliance with legal regulations and Amazon’s terms of service.

10. Scaling the Scraping Process

For large-scale scraping operations, consider using a framework like Scrapy or deploying your scraper on a cloud platform with distributed crawling capabilities.

Other Method for Scraping Amazon Seller Products

Amazon provides APIs like the Product Advertising API for accessing product information. Although this method is legitimate and supported by Amazon, it requires API access approval and is limited in scope.

-

Pros:

Officially supported, reliable.

-

Cons:

Limited access, requires approval, and may involve usage costs.

FAQs about Scraping Data from Amazon

Q1: Is it legal to scrape Amazon for product data?

A: Scraping Amazon without permission may violate their terms of service and could result in legal consequences or blocking of IP addresses. Always consult legal counsel before proceeding.

Q2: How to avoid getting blocked while scraping Amazon?

A: Using proxies to rotate IP, respect robots.txt, implementing delays between requests, avoiding scraping too frequently, etc., some measures could minimize the risk of being blocked by Amazon.

Q3: Why my scraping script stops working?

A: Verify if Amazon has changed its website structure or implemented new anti-scraping measures and adjust the script to accommodate any changes. Also, regularly check and maintain the script to ensure continued functionality.

Summary

Scraping a seller’s products on Amazon involves identifying the seller’s unique URL, navigating through paginated product listings, and handling dynamic content with tools like Selenium. Due to Amazon’s anti-scraping measures, such as CAPTCHAs and rate limiting, it’s essential to use rotating proxies and consider compliance with their terms of service. Using libraries like BeautifulSoup for static content and Selenium for dynamic content, along with careful management of IP addresses and rate limits, can help efficiently extract and store product data while minimizing the risk of being blocked.