If you shop at Walmart regularly, you might wonder when a certain item will go on sale or have a special offer. Manually checking prices every day is a hassle, but if you build a Walmart price tracker, you can automate the process: it monitors price changes and notifies you when the price drops.

This guide will walk you through building your own Walmart price tracker step by step — from data scraping and storage to price monitoring and automatic alerts. Even if you’re new to programming, you’ll be able to follow along and get it working!

What Can Walmart Price Trackers Do?

Let’s say you’re planning to buy a new TV from Walmart, but you’re not sure if now is the best time to buy. Checking the price manually every day is time-consuming. That’s where a price tracking tool comes in handy.

- Automatically monitor the product’s price 24/7.
- Keep a record of historical price trends.
- Instantly notify you via email or SMS when the price drops.

What You’ll Need to Get Started Tracking

Before diving in, make sure you have the following tools ready:

- Python (version 3.8 or higher recommended): for writing and running the script
- Code editor (VS Code or PyCharm): to make coding easier and more efficient
- Walmart Developer Account (optional): using the official API provides more stability
- Proxy IPs (optional): help avoid getting blocked during frequent requests

Install Required Python Libraries

Open your terminal or command prompt and run the following command:

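```bash
pip install requests beautifulsoup4 selenium pandas schedule fake-useragent matplotlib flask
```

smtplib and sqlite3 ship with Python's standard library, so they don't need to be installed with pip. If you plan to use browser automation, you’ll also need ChromeDriver or geckodriver, depending on your browser; recent Selenium 4 releases can usually download a matching driver automatically through Selenium Manager.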

How to Get Walmart Product Prices

Method 1: Official Walmart API (Recommended)

Walmart offers a developer API that lets you fetch product data directly.

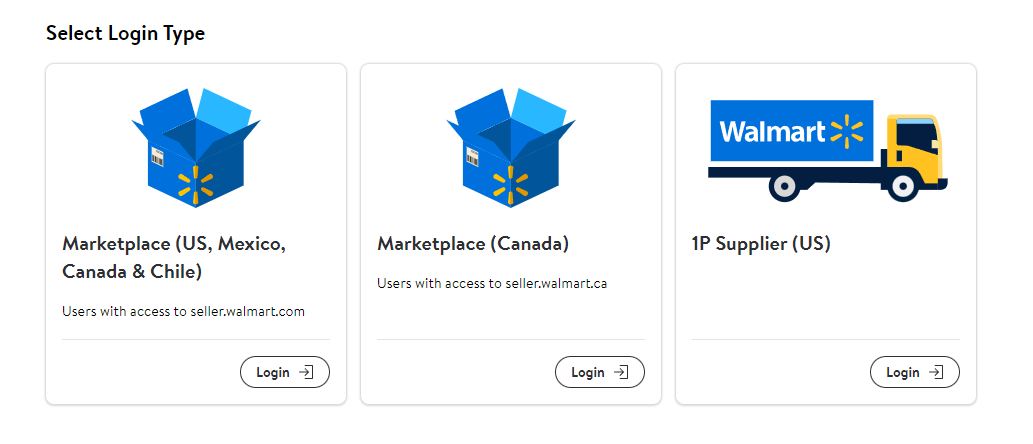

Step 1: Get an API Key

Visit the Walmart Developer Portal.

Create an account and apply for an API key (approval may take some time).

Step 2: Fetch Price Using Python

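Note that the example below routes the request through ScraperAPI, a third-party scraping service, rather than calling Walmart's own API. Once your Walmart API access is approved, swap in the endpoint and authentication from your developer account.

```python
import re

import requests
from bs4 import BeautifulSoup

API_KEY = "YOUR_SCRAPERAPI_KEY"  # ScraperAPI key (not a Walmart API key)
PRODUCT_ID = "123456789"  # Replace with your product ID (the number in the product URL)


def get_price(product_id):
    """Fetch a Walmart product page through ScraperAPI and return its price as a float."""
    product_url = f"https://www.walmart.com/ip/{product_id}"
    response = requests.get(
        "http://api.scraperapi.com",
        params={"api_key": API_KEY, "url": product_url},  # params= URL-encodes the target URL
        timeout=60,
    )
    response.raise_for_status()

    # Extract the price from the HTML; the selector may need updating if Walmart changes its markup
    soup = BeautifulSoup(response.text, "html.parser")
    price_tag = soup.select_one('span[itemprop="price"]')
    if price_tag is None:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", price_tag.get_text())
    return float(match.group().replace(",", "")) if match else None


price = get_price(PRODUCT_ID)
print(f"Current price: ${price}")
```

The official API route is stable, legitimate, and less likely to get blocked, but it requires API access and may have rate limits.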

Method 2: Scrape with a Web Crawler (Alternative)

If you don’t want to use the API, you can use tools like Selenium or BeautifulSoup to scrape the price directly from the product page.

Example: Extract the Price from the Product Page Automatically Using Selenium

```python
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Set up a headless browser (runs without opening a window)
options = Options()
options.add_argument("--headless")
driver = webdriver.Chrome(options=options)

product_url = "https://www.walmart.com/ip/123456789"  # Replace with your product URL

try:
    driver.get(product_url)
    # Wait up to 15 seconds for the price element to load (you may need to tweak the XPath)
    price_element = WebDriverWait(driver, 15).until(
        EC.presence_of_element_located((By.XPATH, '//span[@itemprop="price"]'))
    )
    price = price_element.text
    print(f"Current price: {price}")
finally:
    driver.quit()  # Close the browser, even if the price lookup fails
```

Walmart has anti-scraping protections, and frequent requests may get your IP blocked. For reliability, add a pause such as time.sleep(5) between requests so you don't hit the site too quickly, and use rotating proxy IPs.
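If you already have a page's HTML (for example from requests, or from Selenium's driver.page_source), you can pull the price out without launching a browser. The sketch below uses Python's built-in html.parser instead of BeautifulSoup so it has no extra dependencies; `PriceParser` and `extract_price` are hypothetical names, and it assumes, like the XPath above, that the price sits in an element marked `itemprop="price"` (either in a `content` attribute or in the first text inside it that contains a digit).

```python
import re
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Captures the price from the first element with itemprop="price"."""

    def __init__(self):
        super().__init__()
        self.price_text = None  # Raw price text once found
        self._in_price = False  # True while inside the price element, before its price text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if self.price_text is None and attrs.get("itemprop") == "price":
            # Prefer a machine-readable content="..." attribute when present
            if attrs.get("content"):
                self.price_text = attrs["content"]
            else:
                self._in_price = True

    def handle_data(self, data):
        # Skip fragments like a lone "$" and take the first text containing a digit
        if self._in_price and re.search(r"\d", data):
            self.price_text = data.strip()
            self._in_price = False


def extract_price(html):
    """Return the page's price as a float, or None if it can't be found."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()  # Flush any buffered text
    if parser.price_text is None:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", parser.price_text)
    return float(match.group().replace(",", "")) if match else None


sample = '<div><span itemprop="price">Now $1,299.99</span></div>'
print(extract_price(sample))
```

This is deliberately simple; if Walmart's markup changes, the `itemprop` check is the part to adjust.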

Walmart Scraping Detection Bypass [Optional]

As a rough rule of thumb, keep requests from a single IP address to no more than about one per minute to avoid triggering anti-bot protections. If you visit and scrape Walmart pages more often than that, proxies with clean IPs and a regularly changing User-Agent (UA) are essential to avoid being flagged and getting IP bans. Here’s how to manage and optimize your Walmart price tracker setup.
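To apply the one-request-per-minute guideline across several proxies, you can record when each IP was last used and always pick the one that has rested longest, waiting out any remaining cooldown. This is a minimal sketch under that assumption; the `ProxyScheduler` class, the 60-second default, and the injectable `clock` (used so the logic can be tested) are all illustrative choices, not part of any library.

```python
import time


class ProxyScheduler:
    """Hands out proxies so no single IP is used more often than once per min_interval seconds."""

    def __init__(self, proxies, min_interval=60.0, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock
        # When each proxy was last (or will next be) used; None means never used
        self.last_used = {proxy: None for proxy in proxies}

    def next_proxy(self):
        """Return (proxy, seconds_to_wait) for the least recently used proxy."""
        now = self.clock()
        # Never-used proxies sort first, then the one used longest ago
        proxy = min(
            self.last_used,
            key=lambda p: float("-inf") if self.last_used[p] is None else self.last_used[p],
        )
        last = self.last_used[proxy]
        wait = 0.0 if last is None else max(0.0, self.min_interval - (now - last))
        # Reserve the slot at the moment the request will actually be sent
        self.last_used[proxy] = now + wait
        return proxy, wait
```

In a scraping loop you would call `proxy, wait = scheduler.next_proxy()`, then `time.sleep(wait)`, then pass `proxies={"http": proxy, "https": proxy}` to `requests.get`. With N proxies and the one-minute interval, the tracker as a whole can make roughly N requests per minute.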

(1) Proxy Pool Management

Too many scraping requests can get your IP address temporarily blocked, resulting in a 403 Forbidden error. Walmart product prices may also vary by region; for example, U.S. and Canadian users might see different prices for the same item. A large proxy pool with many IPs to rotate through (including IPs in the region whose prices you want to see) addresses both issues.

OkeyProxy’s network features 150M+ real IPs spanning 200+ countries. It supports all devices and use cases, including automatic IP rotation for scraping and tracking data, at competitive prices, which makes it a strong option for residential proxy scraping. It offers a $3/GB proxy trial so every user can test the reliability, speed, and versatility of its scraping proxies.

```python
import random

import requests

# Proxy pool: replace these placeholders with your own proxy IPs
PROXY_POOL = [
    "http://45.123.123.123:8080",
    "http://67.234.234.234:8888",
    "http://89.111.222.33:3128",
]


# Randomly select and validate a proxy from the pool, dropping dead ones
def rotate_proxy():
    while PROXY_POOL:
        proxy = random.choice(PROXY_POOL)
        if check_proxy(proxy):
            return proxy
        print(f"Proxy {proxy} is not available, trying another...")
        PROXY_POOL.remove(proxy)
    raise RuntimeError("No valid proxies left in the pool.")


# Check if a proxy is working by making a test request through it
def check_proxy(proxy):
    test_url = "https://httpbin.org/ip"
    try:
        # The test URL is HTTPS, so the "https" key is what routes it through the proxy
        response = requests.get(test_url, proxies={"http": proxy, "https": proxy}, timeout=5)
        return response.status_code == 200
    except requests.RequestException:
        return False
```

(2) Anti-Bot Strategies

Randomize Request Headers

To avoid being detected as a bot, rotate your User-Agent with each request:

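```python
from fake_useragent import UserAgent

ua = UserAgent()

# Build a fresh headers dict before each request so the User-Agent changes every time,
# then pass it along, e.g. requests.get(url, headers=headers)
headers = {"User-Agent": ua.random}
```

Add Random Delays Between Requests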

Introducing a delay between requests helps mimic human behavior:

```python
import random
import time

time.sleep(random.uniform(3, 10))  # Wait 3–10 seconds before the next request
```

These strategies help reduce the risk of being blocked and improve the reliability of your scraper over time.
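Random delays set the normal pace, but when Walmart does push back with a 403 (Forbidden) or 429 (Too Many Requests) status, it usually helps to wait progressively longer before retrying instead of retrying immediately. Below is a minimal sketch of exponential backoff with random jitter; `fetch_with_backoff` and its parameters are hypothetical names, `fetch` stands for any function that sends one request and returns an object with a `status_code` (such as a `requests.Response`), and treating 403/429 as retryable is an assumption.

```python
import random
import time

RETRY_STATUSES = {403, 429}  # Statuses treated as "blocked or rate-limited" (an assumption)


def fetch_with_backoff(fetch, max_attempts=4, base_delay=5.0, sleep=time.sleep):
    """Call fetch() until it returns a non-blocked response or attempts run out.

    After each blocked attempt, waits base_delay * 2**attempt seconds plus up to
    base_delay seconds of random jitter. Returns the last response either way.
    """
    response = None
    for attempt in range(max_attempts):
        response = fetch()
        if response.status_code not in RETRY_STATUSES:
            return response
        if attempt < max_attempts - 1:
            delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)
    return response
```

For example, `fetch_with_backoff(lambda: requests.get(url, headers=headers, timeout=10))` would retry a blocked page after roughly 5-10, 10-15, and 20-25 seconds before giving up. Rotating to a different proxy inside `fetch` on each attempt combines well with this.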

Saving Walmart Product Price History

After fetching the price, it’s important to store it so you can track historical trends over time.

Method 1: Store Data in an SQLite Database

```python
import sqlite3
from datetime import datetime

# Example values; in your tracker these come from the scraping step
product_id = "123456789"
price = 29.99

# Create or connect to a database
conn = sqlite3.connect("walmart_prices.db")
cursor = conn.cursor()

# Create a table if it doesn't exist
cursor.execute("""
    CREATE TABLE IF NOT EXISTS prices (
        product_id TEXT,
        price REAL,
        date TEXT
    )
""")

# Insert the new price data, storing the timestamp as an ISO 8601 string
cursor.execute(
    "INSERT INTO prices (product_id, price, date) VALUES (?, ?, ?)",
    (product_id, price, datetime.now().isoformat()),
)
conn.commit()
conn.close()
```

SQLite is a lightweight choice: the database file is created automatically the first time you connect, and no additional software is needed because the sqlite3 module ships with Python.
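Once a few rows have accumulated, SQL can answer the questions a tracker cares about, such as the lowest price seen so far and whether the newest price is below the previous one. A minimal sketch against the `prices` table created above; the function names are hypothetical, the sample rows are made up, and it assumes dates are stored as ISO 8601 strings so that sorting them as text is chronological.

```python
import sqlite3


def lowest_price(conn, product_id):
    """Return the lowest recorded price for a product, or None if there is no history."""
    row = conn.execute(
        "SELECT MIN(price) FROM prices WHERE product_id = ?", (product_id,)
    ).fetchone()
    return row[0]


def latest_two_prices(conn, product_id):
    """Return up to the two most recent prices, newest first."""
    rows = conn.execute(
        "SELECT price FROM prices WHERE product_id = ? ORDER BY date DESC LIMIT 2",
        (product_id,),
    ).fetchall()
    return [r[0] for r in rows]


def price_dropped(conn, product_id):
    """True if the newest recorded price is lower than the one before it."""
    prices = latest_two_prices(conn, product_id)
    return len(prices) == 2 and prices[0] < prices[1]


# Demo with an in-memory database and made-up rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE prices (product_id TEXT, price REAL, date TEXT)")
conn.executemany(
    "INSERT INTO prices VALUES (?, ?, ?)",
    [
        ("123456789", 34.99, "2024-05-01T09:00:00"),
        ("123456789", 31.50, "2024-05-02T09:00:00"),
        ("123456789", 29.99, "2024-05-03T09:00:00"),
    ],
)
print(lowest_price(conn, "123456789"), price_dropped(conn, "123456789"))
```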

Method 2: Store Data in a CSV File (Simpler)

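```python
import os
from datetime import datetime

import pandas as pd

# Example values; in your tracker these come from the scraping step
product_id = "123456789"
price = 29.99

csv_path = "prices.csv"
data = {
    "product_id": [product_id],
    "price": [price],
    "date": [datetime.now()],
}
df = pd.DataFrame(data)

# Append mode: write the header only when the file is first created, and skip the index column
df.to_csv(csv_path, mode="a", header=not os.path.exists(csv_path), index=False)
```

Both methods work well: use a database for more advanced querying, or CSV for simplicity.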
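If you'd rather not depend on pandas just to append one row, Python's built-in csv module does the same job. A minimal sketch; `append_price` and `read_prices` are hypothetical helper names, and the column layout (product_id, price, date) matches the pandas example.

```python
import csv
import os
from datetime import datetime


def append_price(path, product_id, price, when=None):
    """Append one price record, writing a header row only when the file is new or empty."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(["product_id", "price", "date"])
        writer.writerow([product_id, price, (when or datetime.now()).isoformat()])


def read_prices(path, product_id):
    """Return (date, price) pairs for one product, in the order they were written."""
    with open(path, newline="", encoding="utf-8") as f:
        return [
            (row["date"], float(row["price"]))
            for row in csv.DictReader(f)
            if row["product_id"] == product_id
        ]
```

Reading the file back with `read_prices("prices.csv", "123456789")` gives you the history for a single product, ready to compare or plot.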

Daily Walmart Price Checks and Alerts

1. Check Walmart Prices on a Schedule

Use the schedule library to automatically run your price-checking script at a specific time every day:

```python
import time

import schedule


def check_price():
    # Place your scraping and price-checking code here
    print("Checking price...")


# Run the task every day at 9:00 AM
schedule.every().day.at("09:00").do(check_price)

while True:
    schedule.run_pending()
    time.sleep(60)  # Check every minute for scheduled tasks
```

2. Send Email Notifications When Price Drops

```python
import smtplib
from email.mime.text import MIMEText


def send_email(price):
    sender = "your_email@gmail.com"
    receiver = "recipient_email@gmail.com"
    # For Gmail this typically needs to be an app password, not your account password
    password = "your_email_password_or_app_password"

    msg = MIMEText(f"The product price has dropped! Latest price: ${price}")
    msg["Subject"] = "Walmart Price Alert"
    msg["From"] = sender
    msg["To"] = receiver

    # Connect to Gmail's SMTP server and upgrade to TLS before logging in
    with smtplib.SMTP("smtp.gmail.com", 587) as server:
        server.starttls()
        server.login(sender, password)
        server.sendmail(sender, receiver, msg.as_string())
```

Then, within your check_price() function, trigger the alert when the price is below your target:

```python
if price is not None and float(price) <= 100:  # Set your desired price threshold
    send_email(price)
```

Walmart Price Tracker Deployment to the Cloud

If you want your Walmart price tracker to run continuously or at scheduled times, you can deploy the script to the cloud using one of the following options:

- GitHub Actions: free (within usage limits) and great for running scripts once a day
- Cloud servers (AWS, Azure, etc.): ideal for real-time monitoring
- PythonAnywhere: beginner-friendly, though the free version has some limits
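Schedulers such as GitHub Actions or cron on a server start your script at each scheduled time, so in those setups the script should run one check and exit rather than sit in the `while True` loop from the schedule example. One way to support both modes is a command-line flag. This is a minimal sketch; `check_price` is a placeholder for your own scraping and alert code, and the `--loop` flag name is an assumption.

```python
import argparse
import time


def check_price():
    # Placeholder: call your scraping, storage, and alert code here
    print("Checking price...")


def main(argv=None):
    parser = argparse.ArgumentParser(description="Walmart price tracker")
    parser.add_argument(
        "--loop",
        action="store_true",
        help="keep running and check daily instead of checking once and exiting",
    )
    args = parser.parse_args(argv)

    if not args.loop:
        check_price()  # One check, then exit (suits GitHub Actions or cron)
        return 0

    import schedule  # Only needed in loop mode

    schedule.every().day.at("09:00").do(check_price)
    while True:
        schedule.run_pending()
        time.sleep(60)


if __name__ == "__main__":
    main()
```

Run `python tracker.py` from a scheduler, or `python tracker.py --loop` on a machine that stays on.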

Here’s an example of the more common approach: uploading your tracker script to GitHub and scheduling it with GitHub Actions.

Schedule Walmart Price Trackers Within GitHub

First, create a GitHub repository and push your tracker script (tracker.py) to it.

Then add .github/workflows/tracker.yml:

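```yaml
name: Walmart Price Tracker

on:
  schedule:
    - cron: "0 14 * * *"  # 2 PM UTC every day (adjust for your timezone)
  workflow_dispatch:  # Also allows manual runs from the Actions tab

jobs:
  run-tracker:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.12"
      - name: Install dependencies
        run: pip install -r requirements.txt  # Assumes a requirements.txt listing your libraries
      - name: Run Tracker
        run: python tracker.py  # tracker.py should run one check and exit
```

Keep in mind that each run starts on a fresh virtual machine, so a SQLite or CSV file written during the run is discarded afterward unless you commit it back to the repository or store it elsewhere.

Bonus Features to Keep Track of Walmart Item Price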

1. Monitor and Scrape Multiple Walmart Items’ Data

You can easily track multiple products by storing them in a list and looping through them:

```python
PRODUCT_LIST = [
    {"id": "123456789", "name": "Wireless Earbuds", "threshold": 50},
    {"id": "987654321", "name": "Smart Watch", "threshold": 200},
]


def monitor_multiple_products():
    for product in PRODUCT_LIST:
        price = get_price(product["id"])  # get_price() must accept a product ID
        if price is not None and float(price) <= product["threshold"]:
            # send_email() formats this as "Latest price: $<price> for <name>"
            send_email(f"{price} for {product['name']}")
```

This way, you’ll get separate alerts for each item you’re watching, which is super handy during big sales events!
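When several products are checked every day, a plain threshold check sends the same alert on every run for as long as the price stays low. One way to avoid that is to remember the last price you alerted on and only alert again if the price drops further. A minimal sketch; `should_alert` and the `alert_state` dictionary are hypothetical, and in a real tracker you would persist that state (for example in the SQLite database) between runs.

```python
def should_alert(product_id, price, threshold, alert_state):
    """Decide whether to alert, remembering the last alerted price per product.

    Alerts when the price is at or below the threshold and lower than the last
    alerted price. Forgets the product once the price rises above the threshold,
    so the next drop triggers a fresh alert.
    """
    if price is None:
        return False  # Scrape failed; don't alert
    if price > threshold:
        alert_state.pop(product_id, None)  # Back above target: re-arm the alert
        return False
    last_alerted = alert_state.get(product_id)
    if last_alerted is None or price < last_alerted:
        alert_state[product_id] = price
        return True
    return False


state = {}
print(should_alert("123456789", 49.0, 50, state))  # first time at or below $50
print(should_alert("123456789", 49.0, 50, state))  # same price, already alerted
print(should_alert("123456789", 45.0, 50, state))  # dropped further
```

Inside the product loop, you would call `should_alert(product["id"], price, product["threshold"], state)` in place of the bare threshold comparison.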

2. Visualize Price Trends from Saved Tracking Data

Want to analyze how the price changes over time? If you’re storing price data in a CSV or SQLite database, just plot historical trends using pandas and matplotlib:

```python
import sqlite3

import matplotlib.pyplot as plt
import pandas as pd


def plot_price_history():
    # Load data from the SQLite database (or use pd.read_csv for CSV files)
    conn = sqlite3.connect("walmart_prices.db")
    df = pd.read_sql_query("SELECT * FROM prices", conn)
    conn.close()

    # Convert the date column to datetime format
    df["date"] = pd.to_datetime(df["date"])

    # Optional: filter for a specific product, sorted so the line follows the dates
    product_id = "123456789"
    product_df = df[df["product_id"] == product_id].sort_values("date")

    # Plot
    plt.figure(figsize=(10, 5))
    plt.plot(product_df["date"], product_df["price"], marker="o", linestyle="-")
    plt.title(f"Price Trend for Product {product_id}")
    plt.xlabel("Date")
    plt.ylabel("Price (USD)")
    plt.grid(True)
    plt.xticks(rotation=45)
    plt.tight_layout()
    plt.savefig("price_history.png")
    plt.show()
```

You could schedule this function to run weekly and automatically send the chart to your inbox!

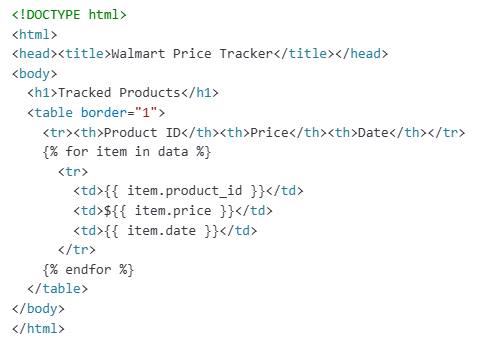
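Alongside the chart, a few summary numbers make a weekly report easier to skim. A minimal sketch that works on a list of (date, price) pairs sorted oldest to newest, such as rows read from the prices table or CSV file; `summarize_prices` is a hypothetical helper and the sample history is made up.

```python
from statistics import mean


def summarize_prices(history):
    """Summarize a list of (date, price) pairs sorted oldest to newest."""
    if not history:
        return None
    prices = [price for _, price in history]
    first, latest = prices[0], prices[-1]
    return {
        "latest": latest,
        "lowest": min(prices),
        "highest": max(prices),
        "average": round(mean(prices), 2),
        # Percentage change from the first recorded price to the latest one
        "change_pct": round((latest - first) / first * 100, 1),
    }


history = [("2024-05-01", 40.0), ("2024-05-02", 38.0), ("2024-05-03", 30.0)]
print(summarize_prices(history))
```

A negative `change_pct` means the item is cheaper now than when you started tracking it.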

3. Build a Walmart Price Tracker’s Web Dashboard

You can create a simple Walmart Price Tracker panel using Flask or Streamlit to display product prices and trends in a web interface.

For instance, Flask serves HTML templates where you can show current prices, historical trends (as images or charts), and more. Here’s a basic example to get you started:

```python
import sqlite3

import pandas as pd
from flask import Flask, render_template

app = Flask(__name__)


@app.route("/")
def index():
    conn = sqlite3.connect("walmart_prices.db")
    df = pd.read_sql_query("SELECT * FROM prices", conn)
    conn.close()

    # Keep the most recent row for each product
    latest_prices = df.sort_values("date").groupby("product_id").last().reset_index()
    return render_template("index.html", data=latest_prices.to_dict(orient="records"))


if __name__ == "__main__":
    app.run(debug=True)  # Serves the dashboard at http://127.0.0.1:5000
```

After that, create a simple HTML template (templates/index.html) to display the product prices.
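The dashboard view loads the whole prices table into pandas just to keep the newest row per product. As the history grows, you could let SQLite do that step instead. A minimal sketch; `latest_prices` is a hypothetical helper, and it assumes dates are stored as ISO 8601 strings so that `MAX(date)` picks the most recent row.

```python
import sqlite3

LATEST_PRICES_SQL = """
    SELECT p.product_id AS product_id, p.price AS price, p.date AS date
    FROM prices AS p
    JOIN (
        SELECT product_id, MAX(date) AS max_date
        FROM prices
        GROUP BY product_id
    ) AS m ON p.product_id = m.product_id AND p.date = m.max_date
    ORDER BY p.product_id
"""


def latest_prices(conn):
    """Return the newest row for each product as a list of dicts."""
    cursor = conn.execute(LATEST_PRICES_SQL)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]
```

In the Flask view, you would open the connection, call `latest_prices(conn)`, close the connection, and pass the result as `data=` to `render_template`; it has the same list-of-dicts shape as `to_dict(orient="records")`.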

Frequently Asked Questions (FAQ)

Q1: Why is my scraper getting blocked?

You’re sending too many requests in a short period of time. Use elite proxies to rotate IP addresses and add a random delay between each request to avoid hitting rate limits:

```python
import random
import time

time.sleep(random.randint(2, 5))  # Wait 2–5 seconds before the next request
```

Q2: How do I find the product ID?

Go to the Walmart product page. The product ID is the number at the end of the URL path, after /ip/ (and after the product-name segment, if the URL includes one). For example:

https://www.walmart.com/ip/123456789 → Product ID is 123456789

Q3: Can I track multiple products at once?

Yes! Just create a list of product IDs and loop through them:

```python
product_list = ["123", "456", "789"]

for product_id in product_list:
    price = get_price(product_id)
    save_price(product_id, price)  # save_price() stands for either storage method above
```

You can also extend this to send separate alerts for each product if their prices drop.
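If your list holds full product URLs rather than bare IDs, a small helper can pull the ID out first. A minimal sketch; `product_id_from_url` is a hypothetical helper, and it assumes the ID is the numeric path segment after /ip/, either directly (as in the Q2 example) or after a product-name segment.

```python
import re
from urllib.parse import urlparse


def product_id_from_url(url):
    """Return the numeric Walmart product ID from an /ip/ URL, or None if none is found."""
    path = urlparse(url).path  # Drops any ?query string or #fragment
    match = re.search(r"/ip/(?:[^/]+/)?(\d+)/?$", path)
    return match.group(1) if match else None
```

For example, `product_list = [product_id_from_url(u) for u in urls]` turns a list of copied product links into IDs for the loop.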

Wrapping Up

That’s all! You now have a fully automated Walmart price tracker that monitors prices 24/7 and instantly notifies you when there’s a drop.

If you deploy a walmart.com price tracker at scale, it’s important to respect Walmart’s robots.txt rules (https://www.walmart.com/robots.txt) and rotate proxy IPs accordingly.

With your custom-built Walmart price tracker, you can check prices while you sleep — and never miss a deal again!
